Neural Networks For Chess

Free Book

Donations are welcome:

Contents

AlphaZero, Leela Chess Zero and Stockfish NNUE revolutionized computer chess. This book gives a complete introduction to the technical inner workings of such engines.

The book is split into four chapters:

-

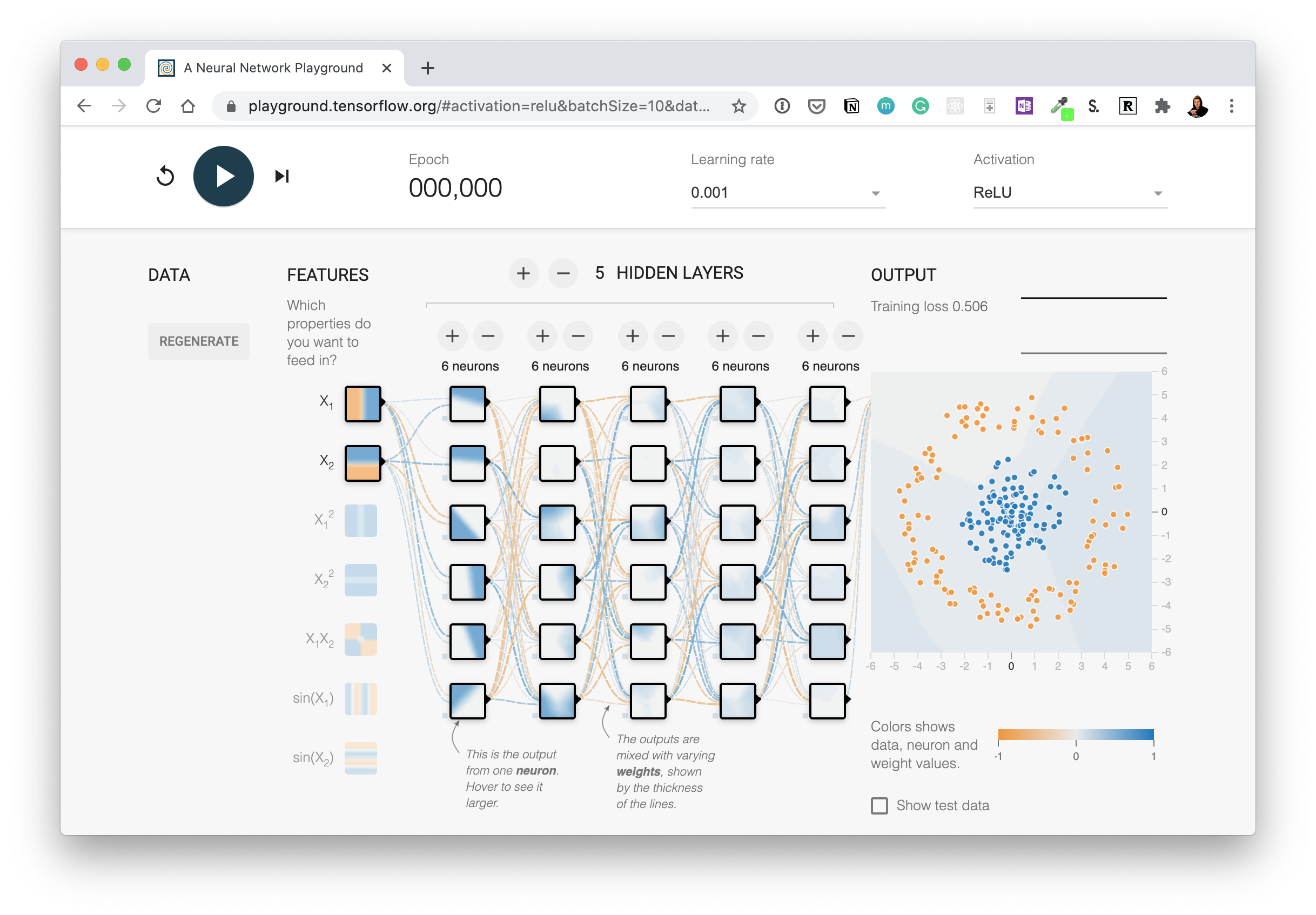

The first chapter introduces neural networks and covers all the basic building blocks that are used to build deep networks such as those used by AlphaZero. Contents include the perceptron, back-propagation and gradient descent, classification, regression, multilayer perpectron, vectorization techniques, convolutional netowrks, squeeze and exciation networks, fully connected networks, batch normalization and rectified linear units, residual layers, overfitting and underfitting.

- The second chapter introduces classical search techniques used for chess engines as well as those used by AlphaZero. Contents include minimax, alpha-beta search, and Monte Carlo tree search.

- The third chapter shows how modern chess engines are designed. Aside from the ground-breaking AlphaGo, AlphaGo Zero and AlphaZero, we cover Leela Chess Zero, Fat Fritz, Fat Fritz 2 and Efficiently Updatable Neural Networks (NNUE) as well as Maia.

- The fourth chapter is about implementing a miniaturized AlphaZero, using Hexapawn, a minimalistic version of chess, as the example. Hexapawn is first solved by minimax search, and the solved positions are used to generate training data for supervised learning. As a comparison, an AlphaZero-like training loop is then implemented, where training is done via self-play combined with reinforcement learning. Finally, AlphaZero-like training and supervised training are compared.
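To give a flavour of the minimax step mentioned for Hexapawn, here is a minimal sketch. It is not the code from the book or this repository. The board encoding, the names `START`, `legal_moves`, `reached_last_rank`, `solve` and `labelled_positions`, and the use of the negamax form of minimax with memoization are all assumptions made for illustration. The rules assumed are the usual ones: pawns move one square forward onto an empty square or capture diagonally forward, and a player wins by reaching the far rank or by leaving the opponent without a legal move.

```python
from functools import lru_cache

# Hypothetical encoding: a tuple of 9 squares, index = row * 3 + col.
# Row 0 is White's home rank; 'W' moves towards row 2, 'B' towards row 0.
START = ('W', 'W', 'W',
         '.', '.', '.',
         'B', 'B', 'B')


def legal_moves(board, player):
    """Return all boards reachable by one move of `player`."""
    step = 1 if player == 'W' else -1
    enemy = 'B' if player == 'W' else 'W'
    result = []
    for idx, piece in enumerate(board):
        if piece != player:
            continue
        row, col = divmod(idx, 3)
        new_row = row + step
        if not 0 <= new_row <= 2:
            continue
        for dc in (-1, 0, 1):
            new_col = col + dc
            if not 0 <= new_col <= 2:
                continue
            target = new_row * 3 + new_col
            # A straight move needs an empty square, a diagonal move a capture.
            wanted = '.' if dc == 0 else enemy
            if board[target] == wanted:
                nxt = list(board)
                nxt[idx] = '.'
                nxt[target] = player
                result.append(tuple(nxt))
    return result


def reached_last_rank(board, player):
    """True if `player` has a pawn on the opponent's home rank."""
    goal = board[6:9] if player == 'W' else board[0:3]
    return player in goal


@lru_cache(maxsize=None)
def solve(board, player):
    """Negamax value for the side to move: +1 is a win, -1 a loss."""
    opponent = 'B' if player == 'W' else 'W'
    if reached_last_rank(board, opponent):
        return -1  # the opponent has just promoted
    moves = legal_moves(board, player)
    if not moves:
        return -1  # no legal move (or no pawns left) loses
    return max(-solve(nxt, opponent) for nxt in moves)


def labelled_positions():
    """Map every (board, player) reachable from START to its solved value."""
    labels = {}
    stack = [(START, 'W')]
    while stack:
        board, player = stack.pop()
        if (board, player) in labels:
            continue
        labels[(board, player)] = solve(board, player)
        opponent = 'B' if player == 'W' else 'W'
        if reached_last_rank(board, opponent):
            continue  # game is over, nothing to expand
        for nxt in legal_moves(board, player):
            stack.append((nxt, opponent))
    return labels
```

Under these assumed rules, `solve(START, 'W')` evaluates to `-1`, which matches the well-known result that the second player wins 3x3 Hexapawn with perfect play. The dictionary returned by `labelled_positions()` is one possible shape for supervised training data: each position paired with its game-theoretic value.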

Source Code

Just clone this repository or browse the files directly. It contains the source code for all examples in the book.

About

During COVID, I worked a lot from home and saved approximately 1.5 hours of commuting time each day. I decided to use that time to do something useful (?) and wrote a book about computer chess. In the end I decided to release the book for free.

Profits

To be completely transparent, here is what I make from every paper copy sold on Amazon. The book retails for $16.95 (about 15 Euro).

- printing costs $4.04

- Amazon takes $6.78

- my royalties are $6.13

Errata

If you find mistakes, please report them here - your help is appreciated!
