TransGAN: Two Transformers Can Make One Strong GAN [YouTube Video]

Paper Authors: Yifan Jiang, Shiyu Chang, Zhangyang Wang

CVPR 2021

This is re-implementation of TransGAN: Two Transformers Can Make One Strong GAN, and That Can Scale Up, CVPR 2021 in PyTorch.

Generative Adversarial Networks-GAN builded completely free of Convolutions and used Transformers architectures which became popular since Vision Transformers-ViT. In this implementation, CIFAR-10 dataset was used.

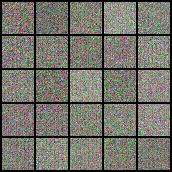

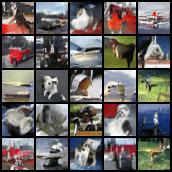

| 0 Epoch | 40 Epoch | 100 Epoch | 200 Epoch |

|

|

|

|

Related Work - Vision Transformers (ViT)

In this implementation, as a discriminator, Vision Transformer(ViT) Block was used. In order to get more info about ViT, you can look at the original paper here

Credits for illustration of ViT: @lucidrains

Installation

Before running train.py, check whether you have libraries in requirements.txt! Also, create ./fid_stat folder and download the fid_stats_cifar10_train.npz file in this folder. To save your model during training, create ./checkpoint folder using mkdir checkpoint.

Training

python train.py

Pretrained Model

You can find pretrained model here. You can download using:

wget https://drive.google.com/file/d/134GJRMxXFEaZA0dF-aPpDS84YjjeXPdE/view

or

curl gdrive.sh | bash -s https://drive.google.com/file/d/134GJRMxXFEaZA0dF-aPpDS84YjjeXPdE/view

License

MIT

Citation

@article{jiang2021transgan,

title={TransGAN: Two Transformers Can Make One Strong GAN},

author={Jiang, Yifan and Chang, Shiyu and Wang, Zhangyang},

journal={arXiv preprint arXiv:2102.07074},

year={2021}

}

@article{dosovitskiy2020,

title={An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale},

author={Dosovitskiy, Alexey and Beyer, Lucas and Kolesnikov, Alexander and Weissenborn, Dirk and Zhai, Xiaohua and Unterthiner, Thomas and Dehghani, Mostafa and Minderer, Matthias and Heigold, Georg and Gelly, Sylvain and Uszkoreit, Jakob and Houlsby, Neil},

journal={arXiv preprint arXiv:2010.11929},

year={2020}

}

@inproceedings{zhao2020diffaugment,

title={Differentiable Augmentation for Data-Efficient GAN Training},

author={Zhao, Shengyu and Liu, Zhijian and Lin, Ji and Zhu, Jun-Yan and Han, Song},

booktitle={Conference on Neural Information Processing Systems (NeurIPS)},

year={2020}

}