ArcaneGAN by Alex Spirin

Changelog

- 2021-12-12 ArcaneGAN v0.3 is live

- 2021-12-09 Thanks to ak92501, we now have a Hugging Face demo

ArcaneGAN v0.3

Videos processed with the Hugging Face video inference Colab.

obama2.mp4

ryan2.mp4

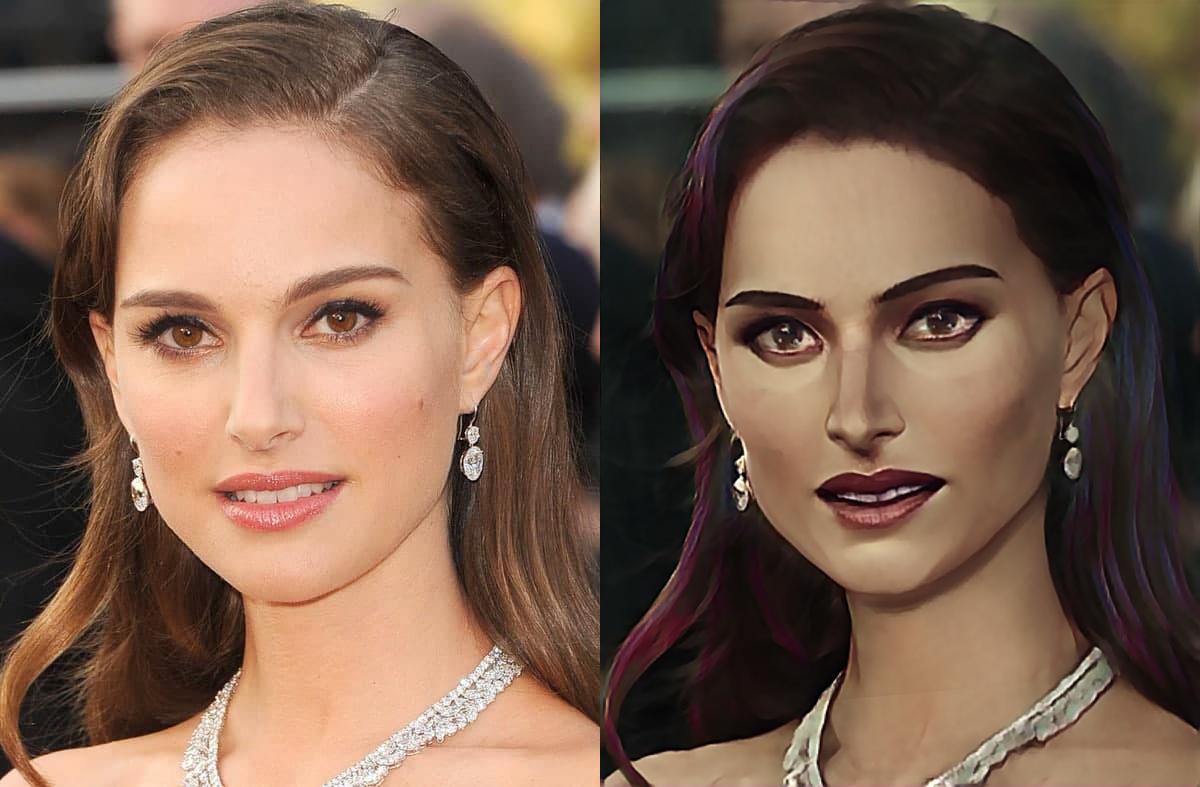

Image samples

Faces were enhanced via GPEN before applying the ArcaneGAN v0.3 filter.

ArcaneGAN v0.2

The release is here

Implementation Details

The filter has a visible effect, but it is still modest at this stage.

The model is a PyTorch `*.jit` (TorchScript) file of a fastai v1-flavored U-Net, trained on a paired dataset generated with a blended StyleGAN2. You can see the blending Colab I used here.
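This README does not spell out how the StyleGAN2 blending or the pairing works. The code below is a minimal sketch of one common approach under stated assumptions: resolution-based layer swapping between a base generator and a fine-tuned one, followed by drawing (input, target) pairs from the same latents. It is not code from ArcaneGAN or the blending Colab. The assumptions are that parameter names follow the `synthesis.b{res}` convention used in stylegan2-ada-pytorch, that `swap_res` and the choice of which model supplies the coarse layers are free parameters, and that `blend_weights` and `make_paired_dataset` are hypothetical helpers.

```python
import re
from typing import Callable, Dict, List, Sequence, Tuple, TypeVar

T = TypeVar("T")  # weight or image type
Z = TypeVar("Z")  # latent type

# Assumption: synthesis-block parameters carry their resolution in the name,
# e.g. "synthesis.b64.conv1.weight" (stylegan2-ada-pytorch style).
BLOCK_RES = re.compile(r"\.b(\d+)\.")


def blend_weights(
    low_res_source: Dict[str, T],
    high_res_source: Dict[str, T],
    swap_res: int,
) -> Dict[str, T]:
    """Hypothetical layer swap: blocks at or above `swap_res` come from
    `high_res_source`; everything else (coarse blocks and, by assumption,
    the mapping network) comes from `low_res_source`."""
    blended: Dict[str, T] = {}
    for name, param in low_res_source.items():
        match = BLOCK_RES.search(name)
        if match and int(match.group(1)) >= swap_res:
            blended[name] = high_res_source[name]
        else:
            blended[name] = param
    return blended


def make_paired_dataset(
    photo_generator: Callable[[Z], T],
    styled_generator: Callable[[Z], T],
    latents: Sequence[Z],
) -> List[Tuple[T, T]]:
    """Hypothetical pairing: each latent goes through both generators,
    so every (input, target) pair shares identity and pose."""
    return [(photo_generator(z), styled_generator(z)) for z in latents]


if __name__ == "__main__":
    # Toy "state dicts": floats stand in for weight tensors.
    base = {
        "mapping.fc0.weight": 0.0,
        "synthesis.b4.conv1.weight": 0.0,
        "synthesis.b64.conv1.weight": 0.0,
    }
    finetuned = {name: 1.0 for name in base}
    print(blend_weights(base, finetuned, swap_res=32))
    # {'mapping.fc0.weight': 0.0, 'synthesis.b4.conv1.weight': 0.0, 'synthesis.b64.conv1.weight': 1.0}
```

With real models, the dictionaries would come from `state_dict()` and hold tensors, and the generators would map latent vectors to images. The selection logic stays the same. The blended generator's outputs would then serve as U-Net training targets for the matching base-generator outputs.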