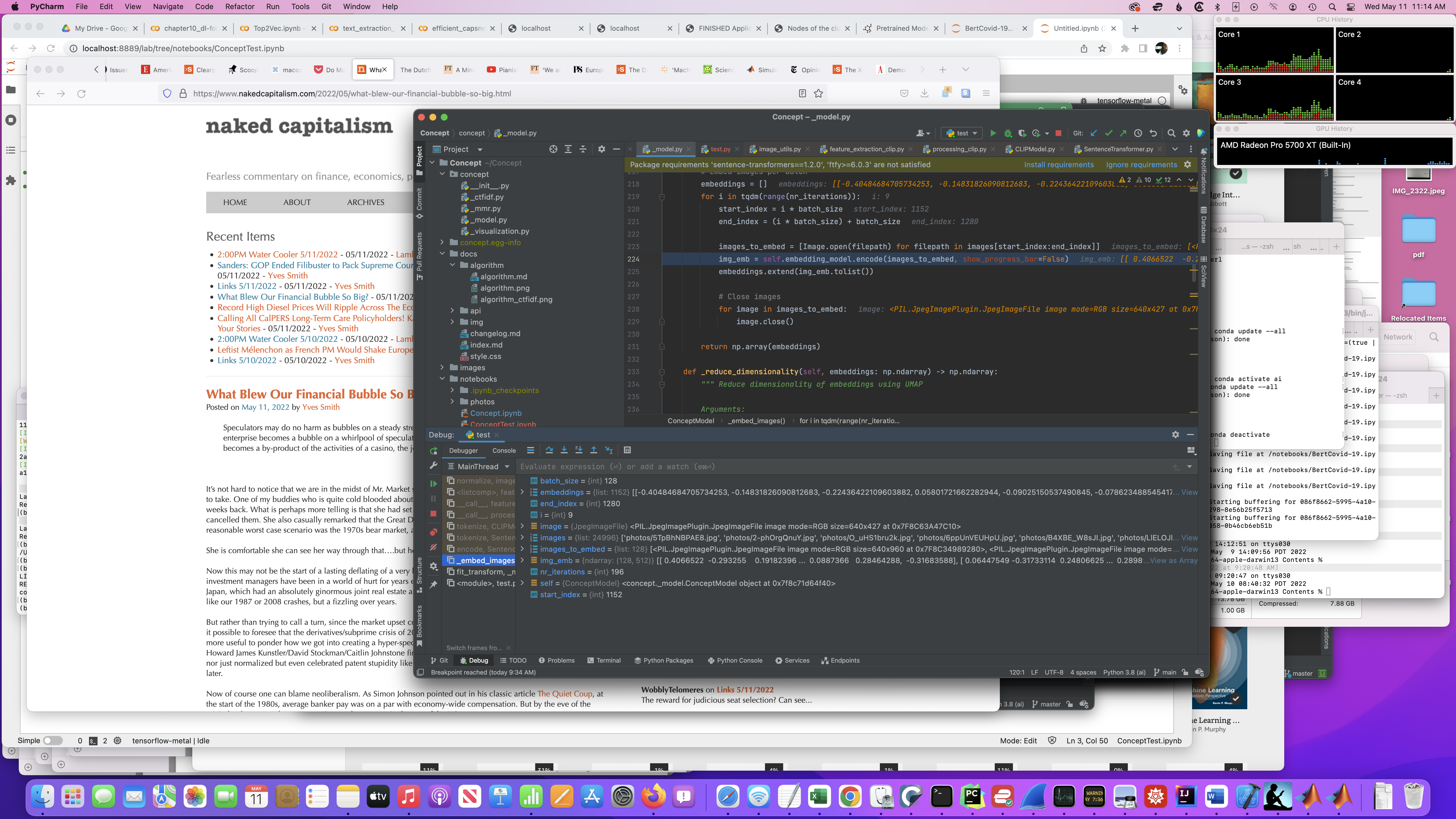

Running a Concept example on OS S Monterey 12.3.1

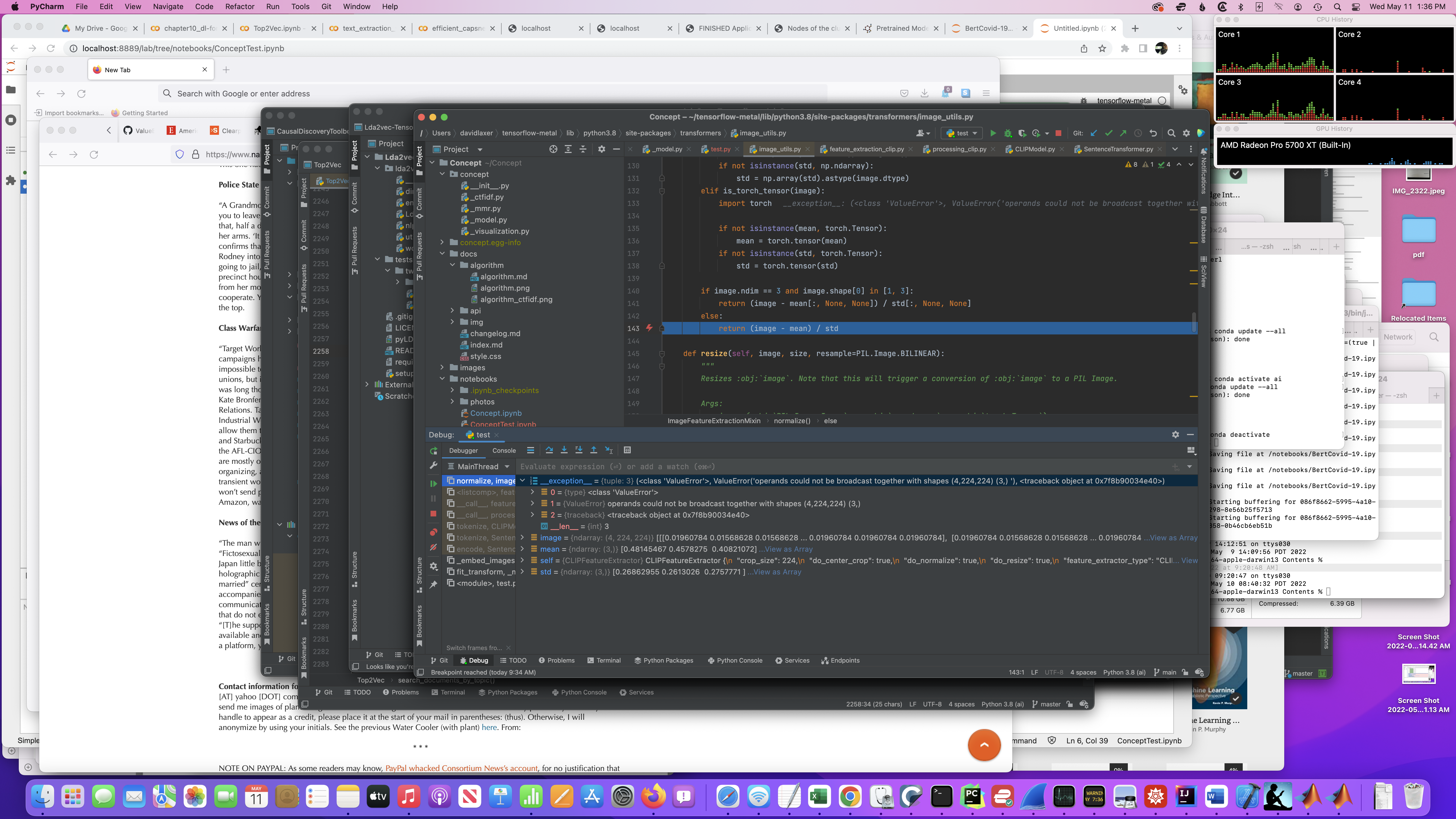

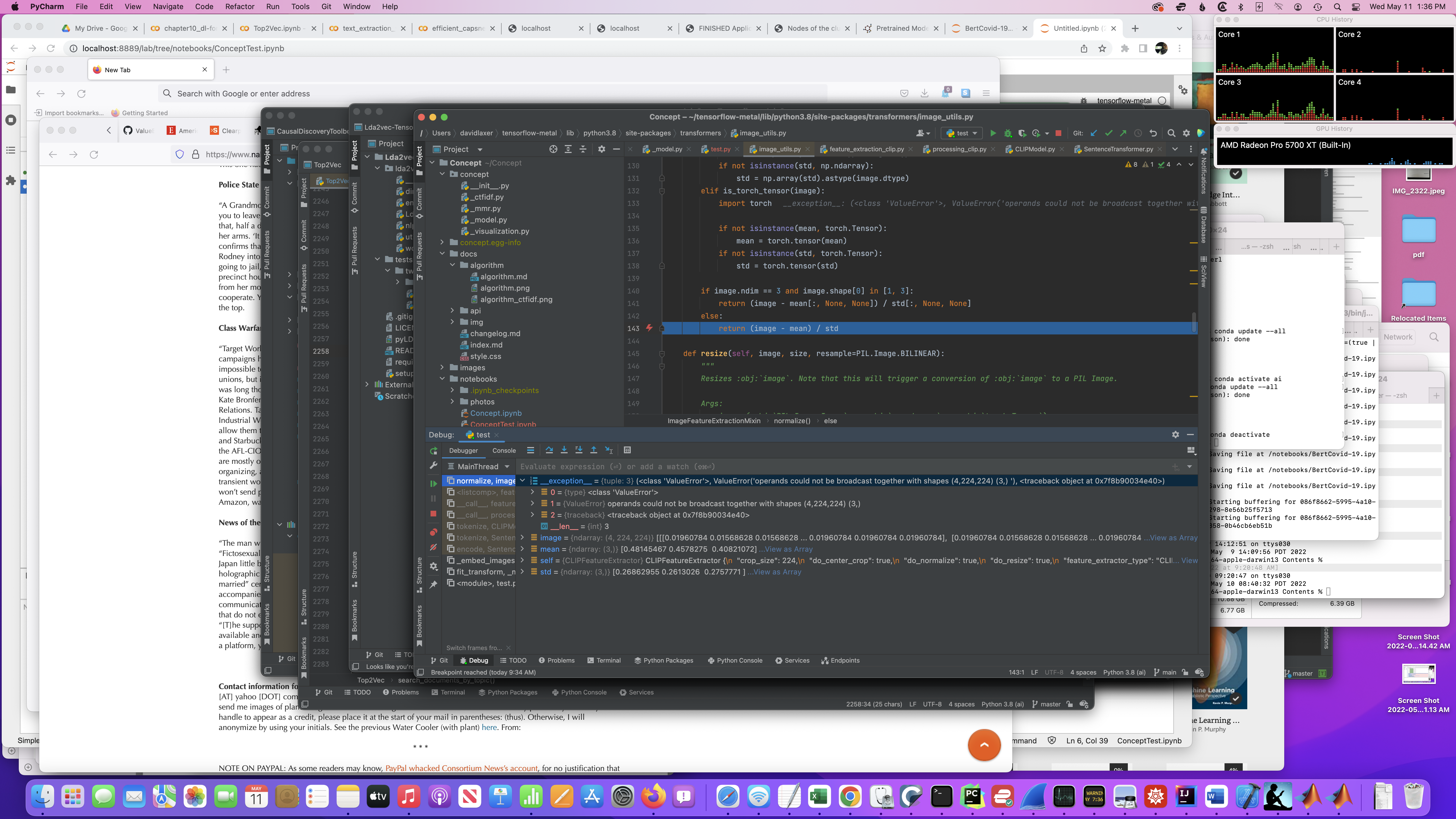

...Transformers/Image_utils #143:

return (image - mean) / std

image is (4,224,224)

mean is (3,)

std is (3,)

Python 3.8.13

% pip show tensorflow_macos

WARNING: Ignoring invalid distribution -umpy (/Users/davidlaxer/tensorflow-metal/lib/python3.8/site-packages)

Name: tensorflow-macos

Version: 2.8.0

Summary: TensorFlow is an open source machine learning framework for everyone.

Home-page: https://www.tensorflow.org/

Author: Google Inc.

Author-email: [email protected]

License: Apache 2.0

Location: /Users/davidlaxer/tensorflow-metal/lib/python3.8/site-packages

Requires: absl-py, astunparse, flatbuffers, gast, google-pasta, grpcio, h5py, keras, keras-preprocessing, libclang, numpy, opt-einsum, protobuf, setuptools, six, tensorboard, termcolor, tf-estimator-nightly, typing-extensions, wrapt

Required-by:

pip show sentence_transformers

WARNING: Ignoring invalid distribution -umpy (/Users/davidlaxer/tensorflow-metal/lib/python3.8/site-packages)

Name: sentence-transformers

Version: 2.1.0

Summary: Sentence Embeddings using BERT / RoBERTa / XLM-R

Home-page: https://github.com/UKPLab/sentence-transformers

Author: Nils Reimers

Author-email: [email protected]

License: Apache License 2.0

Location: /Users/davidlaxer/tensorflow-metal/lib/python3.8/site-packages

Requires: huggingface-hub, nltk, numpy, scikit-learn, scipy, sentencepiece, tokenizers, torch, torchvision, tqdm, transformers

Required-by: bertopic, concept

% pip show transformers

WARNING: Ignoring invalid distribution -umpy (/Users/davidlaxer/tensorflow-metal/lib/python3.8/site-packages)

Name: transformers

Version: 4.11.3

Summary: State-of-the-art Natural Language Processing for TensorFlow 2.0 and PyTorch

Home-page: https://github.com/huggingface/transformers

Author: Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Sam Shleifer, Patrick von Platen, Sylvain Gugger, Suraj Patil, Stas Bekman, Google AI Language Team Authors, Open AI team Authors, Facebook AI Authors, Carnegie Mellon University Authors

Author-email: [email protected]

License: Apache

Location: /Users/davidlaxer/tensorflow-metal/lib/python3.8/site-packages

Requires: filelock, huggingface-hub, numpy, packaging, pyyaml, regex, requests, sacremoses, tokenizers, tqdm

Required-by: sentence-transformers

Here's the code:

import os

import glob

import zipfile

from tqdm import tqdm

from sentence_transformers import util

# 25k images from Unsplash

img_folder = 'photos/'

if not os.path.exists(img_folder) or len(os.listdir(img_folder)) == 0:

os.makedirs(img_folder, exist_ok=True)

photo_filename = 'unsplash-25k-photos.zip'

if not os.path.exists(photo_filename): # Download dataset if does not exist

util.http_get('http://sbert.net/datasets/' + photo_filename, photo_filename)

# Extract all images

with zipfile.ZipFile(photo_filename, 'r') as zf:

for member in tqdm(zf.infolist(), desc='Extracting'):

zf.extract(member, img_folder)

img_names = list(glob.glob('photos/*.jpg'))

from concept import ConceptModel

concept_model = ConceptModel()

concepts = concept_model.fit_transform(img_names)

B/s]

0%| | 0/196 [00:00<?, ?it/s]/Users/davidlaxer/tensorflow-metal/lib/python3.8/site-packages/transformers/feature_extraction_utils.py:158: UserWarning: Creating a tensor from a list of numpy.ndarrays is extremely slow. Please consider converting the list to a single numpy.ndarray with numpy.array() before converting to a tensor. (Triggered internally at ../torch/csrc/utils/tensor_new.cpp:201.)

tensor = as_tensor(value)

5%|█▉ | 9/196 [02:21<48:54, 15.69s/it]

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Input In [2], in <cell line: 3>()

1 from concept import ConceptModel

2 concept_model = ConceptModel()

----> 3 concepts = concept_model.fit_transform(img_names)

File ~/Concept/concept/_model.py:120, in ConceptModel.fit_transform(self, images, docs, image_names, image_embeddings)

118 # Calculate image embeddings if not already generated

119 if image_embeddings is None:

--> 120 image_embeddings = self._embed_images(images)

122 # Reduce dimensionality and cluster images into concepts

123 reduced_embeddings = self._reduce_dimensionality(image_embeddings)

File ~/Concept/concept/_model.py:224, in ConceptModel._embed_images(self, images)

221 end_index = (i * batch_size) + batch_size

223 images_to_embed = [Image.open(filepath) for filepath in images[start_index:end_index]]

--> 224 img_emb = self.embedding_model.encode(images_to_embed, show_progress_bar=False)

225 embeddings.extend(img_emb.tolist())

227 # Close images

File ~/tensorflow-metal/lib/python3.8/site-packages/sentence_transformers/SentenceTransformer.py:153, in SentenceTransformer.encode(self, sentences, batch_size, show_progress_bar, output_value, convert_to_numpy, convert_to_tensor, device, normalize_embeddings)

151 for start_index in trange(0, len(sentences), batch_size, desc="Batches", disable=not show_progress_bar):

152 sentences_batch = sentences_sorted[start_index:start_index+batch_size]

--> 153 features = self.tokenize(sentences_batch)

154 features = batch_to_device(features, device)

156 with torch.no_grad():

File ~/tensorflow-metal/lib/python3.8/site-packages/sentence_transformers/SentenceTransformer.py:311, in SentenceTransformer.tokenize(self, texts)

307 def tokenize(self, texts: Union[List[str], List[Dict], List[Tuple[str, str]]]):

308 """

309 Tokenizes the texts

310 """

--> 311 return self._first_module().tokenize(texts)

File ~/tensorflow-metal/lib/python3.8/site-packages/sentence_transformers/models/CLIPModel.py:71, in CLIPModel.tokenize(self, texts)

68 if len(images) == 0:

69 images = None

---> 71 inputs = self.processor(text=texts_values, images=images, return_tensors="pt", padding=True)

72 inputs['image_text_info'] = image_text_info

73 return inputs

File ~/tensorflow-metal/lib/python3.8/site-packages/transformers/models/clip/processing_clip.py:148, in CLIPProcessor.__call__(self, text, images, return_tensors, **kwargs)

145 encoding = self.tokenizer(text, return_tensors=return_tensors, **kwargs)

147 if images is not None:

--> 148 image_features = self.feature_extractor(images, return_tensors=return_tensors, **kwargs)

150 if text is not None and images is not None:

151 encoding["pixel_values"] = image_features.pixel_values

File ~/tensorflow-metal/lib/python3.8/site-packages/transformers/models/clip/feature_extraction_clip.py:150, in CLIPFeatureExtractor.__call__(self, images, return_tensors, **kwargs)

148 images = [self.center_crop(image, self.crop_size) for image in images]

149 if self.do_normalize:

--> 150 images = [self.normalize(image=image, mean=self.image_mean, std=self.image_std) for image in images]

152 # return as BatchFeature

153 data = {"pixel_values": images}

File ~/tensorflow-metal/lib/python3.8/site-packages/transformers/models/clip/feature_extraction_clip.py:150, in <listcomp>(.0)

148 images = [self.center_crop(image, self.crop_size) for image in images]

149 if self.do_normalize:

--> 150 images = [self.normalize(image=image, mean=self.image_mean, std=self.image_std) for image in images]

152 # return as BatchFeature

153 data = {"pixel_values": images}

File ~/tensorflow-metal/lib/python3.8/site-packages/transformers/image_utils.py:143, in ImageFeatureExtractionMixin.normalize(self, image, mean, std)

141 return (image - mean[:, None, None]) / std[:, None, None]

142 else:

--> 143 return (image - mean) / std

ValueError: operands could not be broadcast together with shapes (4,224,224) (3,)

The exception is in the normalize() function ... I believe in the 9th Pil image: