I've created a Blender scene containing a die, and I'm using zpy to generate a dataset of images by rotating the camera around the object while jittering both the die's position and the camera's. Everything seems to be working properly, but the bounding boxes written to the annotation file get progressively worse with each image.

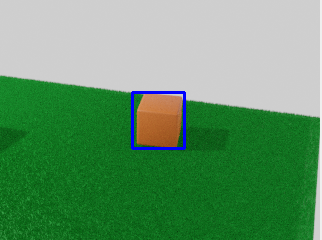

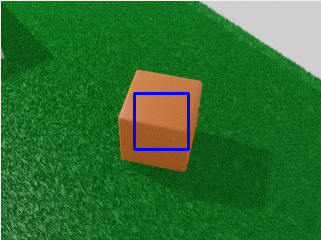

For example, this is the bounding box for the first image:

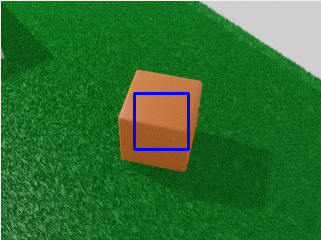

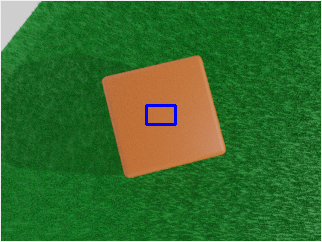

This one we get halfway through:

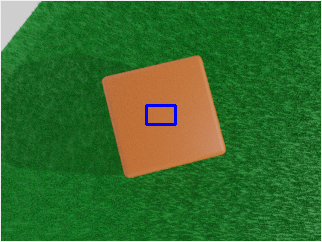

And this is one of the last ones:

This is my code (I've cut some stuff, I can't paste it all for some reason):

    # Imports used by the code shown below (the original paste was trimmed)
    import math
    import random
    from pathlib import Path

    import bpy
    import zpy


    def run(num_steps=20):
        # Random seed results in unique behavior
        zpy.blender.set_seed()

        # Create the saver object
        saver = zpy.saver_image.ImageSaver(description="Domain randomized dado")

        # Add the dado category
        dado_seg_color = zpy.color.random_color(output_style="frgb")
        saver.add_category(name="dado", color=dado_seg_color)

        # Segment the dado (make sure a material exists for the object!)
        zpy.objects.segment("dado", color=dado_seg_color)

        # Original dice pose
        zpy.objects.save_pose("dado", "dado_pose_og")

        # Original camera pose
        zpy.objects.save_pose("Camera", "Camera_pose_og")

        # Save the positions of objects so we can jitter them later
        zpy.objects.save_pose("Camera", "cam_pose")
        zpy.objects.save_pose("dado", "dado_pose")

        asset_dir = Path(bpy.data.filepath).parent
        texture_paths = [
            asset_dir / Path("textures/madera.png"),
            asset_dir / Path("textures/ladrillo.png"),
        ]

        # Run the sim.
        for step_idx in range(num_steps):
            # Example logging
            # stp = zpy.blender.step()
            # print("BLENDER STEPS: ", stp.num_steps)
            # log.debug("This is a debug log")

            # Return camera and dado to original positions
            zpy.objects.restore_pose("Camera", "cam_pose")
            zpy.objects.restore_pose("dado", "dado_pose")

            # Rotate camera
            # (rotate() is a helper from the part of the script that was cut)
            location = bpy.context.scene.objects["Camera"].location
            angle = step_idx * 360 / num_steps
            location = rotate(location, angle, axis=(0, 0, 1))
            bpy.data.objects["Camera"].location = location

            # Jitter dado pose
            zpy.objects.jitter(
                "dado",
                translate_range=((-300, 300), (-300, 300), (0, 0)),
                rotate_range=(
                    (0, 0),
                    (0, 0),
                    (-math.pi, math.pi),
                ),
            )

            # Jitter the camera pose
            zpy.objects.jitter(
                "Camera",
                translate_range=(
                    (-5, 5),
                    (-5, 5),
                    (-5, 5),
                ),
            )

            # Camera should be looking at dado
            zpy.camera.look_at("Camera", bpy.data.objects["dado"].location)

            texture_path = random.choice(texture_paths)

            # HDRIs are like a pre-made background with lighting
            # zpy.hdris.random_hdri()

            # Pick a random texture from the 'textures' folder (relative to blendfile)
            # Textures are images that we will map onto a material
            new_mat = zpy.material.make_mat_from_texture(texture_path)
            # zpy.material.set_mat("dado", new_mat)

            # Have to segment the new material
            zpy.objects.segment("dado", color=dado_seg_color)

            # Jitter the dado material
            # zpy.material.jitter(bpy.data.objects["dado"].active_material)

            # Jitter the HSV for empty and full images
            '''
            hsv = (
                random.uniform(0.49, 0.51),  # (hue)
                random.uniform(0.95, 1.1),  # (saturation)
                random.uniform(0.75, 1.2),  # (value)
            )
            '''

            # Name for each of the output images
            rgb_image_name = zpy.files.make_rgb_image_name(step_idx)
            iseg_image_name = zpy.files.make_iseg_image_name(step_idx)
            depth_image_name = zpy.files.make_depth_image_name(step_idx)

            # Render image
            zpy.render.render(
                rgb_path=saver.output_dir / rgb_image_name,
                iseg_path=saver.output_dir / iseg_image_name,
                depth_path=saver.output_dir / depth_image_name,
                # hsv=hsv,
            )

            # Add images to saver
            saver.add_image(
                name=rgb_image_name,
                style="default",
                output_path=saver.output_dir / rgb_image_name,
                frame=step_idx,
            )
            saver.add_image(
                name=iseg_image_name,
                style="segmentation",
                output_path=saver.output_dir / iseg_image_name,
                frame=step_idx,
            )
            saver.add_image(
                name=depth_image_name,
                style="depth",
                output_path=saver.output_dir / depth_image_name,
                frame=step_idx,
            )

            # Add annotation to segmentation image
            saver.add_annotation(
                image=rgb_image_name,
                seg_image=iseg_image_name,
                seg_color=dado_seg_color,
                category="dado",
            )

        # Write out annotations
        saver.output_annotated_images()
        saver.output_meta_analysis()

        # ZUMO Annotations
        zpy.output_zumo.OutputZUMO(saver).output_annotations()

        # COCO Annotations
        zpy.output_coco.OutputCOCO(saver).output_annotations()

        # Return to the initial state
        zpy.objects.restore_pose("dado", "dado_pose_og")
        zpy.objects.restore_pose("Camera", "Camera_pose_og")
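
The `rotate` helper called in the loop is not included in the paste. For readers following along, here is a minimal sketch of what such a helper might look like. It is an assumption, not the original function: it takes the angle in degrees (because the loop passes `step_idx*360/num_steps`), rotates about an axis through the world origin using Rodrigues' formula, and returns a plain tuple.

```python
import math


def rotate(point, angle_degrees, axis=(0, 0, 1)):
    """Hypothetical stand-in for the helper cut from the post.

    Rotates a 3D point about an axis through the origin by an angle
    given in degrees, using Rodrigues' rotation formula.
    """
    angle = math.radians(angle_degrees)
    cos_a, sin_a = math.cos(angle), math.sin(angle)

    # Normalize the rotation axis
    norm = math.sqrt(sum(c * c for c in axis))
    kx, ky, kz = (c / norm for c in axis)

    # Works for tuples and for any other 3-element iterable
    px, py, pz = point

    # Cross product k x p and dot product k . p
    cx = ky * pz - kz * py
    cy = kz * px - kx * pz
    cz = kx * py - ky * px
    dot = kx * px + ky * py + kz * pz

    return (
        px * cos_a + cx * sin_a + kx * dot * (1 - cos_a),
        py * cos_a + cy * sin_a + ky * dot * (1 - cos_a),
        pz * cos_a + cz * sin_a + kz * dot * (1 - cos_a),
    )


# A quarter turn about Z moves a point on the X axis onto the Y axis
x, y, z = rotate((1.0, 0.0, 0.0), 90)
print(round(x, 6), round(y, 6), round(z, 6))
```

Because the camera pose is restored at the top of every iteration, the angle in this sketch is applied to the saved position each time and does not accumulate across steps.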

Is this my fault or an actual bug?
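
One way to narrow this down might be to recompute the box directly from a segmentation image and compare it with the `bbox` entry in the COCO annotations, which uses the `[x, y, width, height]` layout. The sketch below is only an illustration: `bbox_from_segmentation` and its `tol` parameter are hypothetical names, the image is assumed to be already loaded as rows of float RGB tuples in the 0-1 range (which is what the `"frgb"` color style appears to produce), and loading the image file is left out.

```python
def bbox_from_segmentation(pixels, seg_color, tol=0.02):
    """Hypothetical check: box around all pixels matching seg_color.

    pixels    -- list of rows, each row a list of (r, g, b) floats in 0-1
    seg_color -- (r, g, b) floats in 0-1
    tol       -- per-channel tolerance for a pixel to count as a match

    Returns [x, y, width, height] in pixel units, or None if no pixel matches.
    """
    xs, ys = [], []
    for y, row in enumerate(pixels):
        for x, rgb in enumerate(row):
            if all(abs(c - t) <= tol for c, t in zip(rgb, seg_color)):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return [min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1]


# Tiny 5x4 example image with a 2x2 patch of the segmentation color
bg = (0.0, 0.0, 0.0)
fg = (0.2, 0.6, 0.9)
image = [
    [bg, bg, bg, bg, bg],
    [bg, fg, fg, bg, bg],
    [bg, fg, fg, bg, bg],
    [bg, bg, bg, bg, bg],
]
print(bbox_from_segmentation(image, fg))  # [1, 1, 2, 2]
```

If the recomputed box matches the object in the segmentation image but differs from the annotation, that would point at the annotation step; if the segmentation image itself is off, the cause is more likely in the scene or the script.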

- OS: Ubuntu 20.04

- Python 3.9

- Blender 2.93

- zpy: latest
