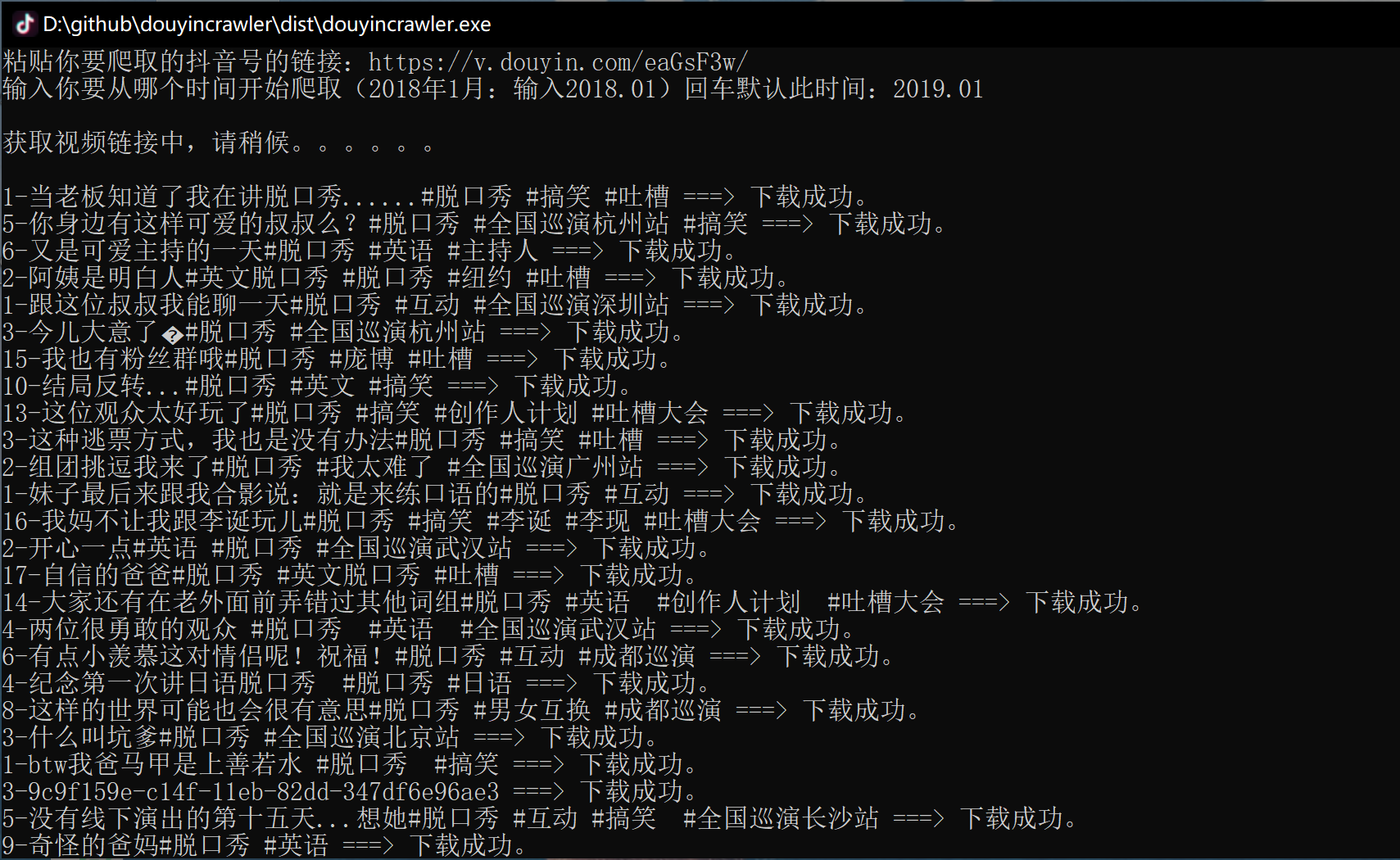

Traceback (most recent call last):

File "C:\Users\Administrator\Desktop\douyincrawler\douyincrawler.py", line 119, in <module>

print(res.result())

File "C:\Users\Administrator\AppData\Local\Programs\Python\Python39\lib\concurrent\futures\_base.py", line 438, in result

return self.__get_result()

File "C:\Users\Administrator\AppData\Local\Programs\Python\Python39\lib\concurrent\futures\_base.py", line 390, in __get_result

raise self._exception

File "C:\Users\Administrator\AppData\Local\Programs\Python\Python39\lib\concurrent\futures\thread.py", line 52, in run

result = self.fn(*self.args, **self.kwargs)

File "C:\Users\Administrator\Desktop\douyincrawler\douyincrawler.py", line 49, in get_video

with open(title, 'wb') as v:

OSError: [Errno 22] Invalid argument: '打了杯咖啡就知道败家/2022.04-7/1-现在是不是已经不流行文青了\U0001f979 \n九叶重楼二两,冬至蝉蛹一钱,煎入隔年雪,可医世人相思疾苦,可重楼七叶一枝花,冬至何来蝉蛹,雪又怎能隔年,原是 相思无解!\n殊不知,夏枯即为九重楼,掘地三尺寒蝉现,除夕子时雪,落地已隔年。相思亦可解….mp4'
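
The path passed to `open()` in `get_video` contains literal `\n` characters taken from the video description. Windows does not allow control characters (or any of `< > : " / \ | ? *`) in a file name, which is the most likely reason for `Errno 22` here. Below is a minimal sketch of cleaning the title before it is used as a file name. The helper `sanitize_component`, its `replacement` and `max_len` parameters, and the `"untitled"` fallback are hypothetical and not part of douyincrawler.

```python
import re

# Characters Windows rejects in a file name, plus ASCII control
# characters 0-31 (this range includes the "\n" seen in the traceback).
_INVALID_CHARS = re.compile(r'[<>:"/\\|?*\x00-\x1f]')


def sanitize_component(name, replacement="_", max_len=150):
    """Return `name` made safe for use as ONE path component on Windows.

    Hypothetical helper: max_len=150 is an arbitrary cap chosen for
    illustration, not a documented limit.
    """
    cleaned = _INVALID_CHARS.sub(replacement, name)
    # Truncate first, then drop leading/trailing spaces and dots,
    # since Windows does not accept names ending in a space or a dot.
    cleaned = cleaned[:max_len].strip(" .")
    return cleaned or "untitled"


if __name__ == "__main__":
    raw_title = "1-现在是不是已经不流行文青了\U0001f979 \n九叶重楼二两"
    # The directory parts are joined separately so that "/" in the
    # path itself is not replaced.
    print(sanitize_component(raw_title) + ".mp4")
```

Because `/` is also replaced, the helper should be applied only to the title part of the path, not to the whole `author/date/title.mp4` string.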